Key points

- Exploratory data analysis (EDA) is crucial in any data analysis project. It involves exploring, summarizing, and visualizing your data to gain insights, identify patterns, and detect outliers.

- EDA can also help you formulate hypotheses, choose appropriate statistical tests, and communicate your findings effectively.

- In this article, I explain how I perform EDA in R using the tidyverse, a collection of packages for data manipulation, visualization, and modeling, following the approach I used for my article in an impact-factor journal.

- I will use a generated dataset for this tutorial that contains information about 1000 students from different countries, their academic performance, and their satisfaction with their university.

- You will learn how to load and view the data in R, summarize the data using descriptive statistics, visualize the data using charts and graphs, identify missing values and outliers, transform and filter the data, perform hypothesis testing and correlation analysis, and generate an EDA report using R Markdown.

Packages and Functions with Their Descriptions

| Function | Description |
|---|---|
| data() | Load a built-in dataset |
| head() | View the first six rows of a dataset |
| summary() | Summarize a dataset using descriptive statistics |
| ggplot() | Create a plot using the grammar of graphics |
| geom_bar() | Add a bar chart layer to a plot |
| geom_histogram() | Add a histogram layer to a plot |
| geom_boxplot() | Add a boxplot layer to a plot |
| geom_point() | Add a scatterplot layer to a plot |
| geom_smooth() | Add a smoothed line layer to a plot |
| facet_wrap() | Wrap a plot into multiple panels based on a factor |
| aes() | Define the aesthetic mapping of a plot |
| labs() | Modify the labels of a plot |
| theme() | Modify the theme of the plot |
| filter() | Filter rows of a dataset based on a condition |
| select() | Select columns of a dataset |
| mutate() | Create or modify columns of a dataset |
| group_by() | Group a dataset by one or more variables |
| summarize() | Summarize a dataset by applying a function to each group |
| arrange() | Arrange rows of a dataset by one or more variables |
| na.omit() | Remove rows with missing values from a dataset |
| is.na() | Check if a value is missing |
| t.test() | Perform a t-test |
| cor.test() | Perform a correlation test |
| rmarkdown::render() | Render an R Markdown document |

Hi, I’m Zubair Goraya, a PhD scholar and a certified data analyst and freelancer with 5 years of experience. I’m also a contributor to Data Analysis, a website that provides tutorials related to RStudio. I am passionate about data science and statistics and enjoy sharing my knowledge and skills with others. I have published several papers in international journals and have helped many students and researchers with their data analysis projects.

In this article, I will share my insights on exploratory data analysis (EDA) in R and how it can help you prepare your data for international journal publication.


Exploratory Data Analysis (EDA) for Journal-Ready Data

Data is everywhere. We live in a world where we can collect, store, and analyze massive amounts of data from various sources and domains. Data can help us understand the world better, make informed decisions, and solve complex problems. However, data alone is not enough. We must process, transform, and interpret the data to extract meaningful information and insights. This is where data analysis comes in. Data analysis is commonly divided into two phases:

- Exploratory data analysis (EDA) is the first phase, where we explore, summarize, and visualize the data to gain insights, identify patterns, and detect outliers.

- Confirmatory data analysis (CDA) is the second phase, where we confirm, validate, and generalize the findings from EDA using statistical tests and models.

My Journey with EDA in R

EDA is a crucial step in any data analysis project. It helps us understand the characteristics, distribution, and relationships of the variables in our data. It also helps us formulate hypotheses, choose appropriate statistical tests, and communicate our findings effectively. EDA can also reveal problems with the data, such as the following, so that we can fix them before proceeding to the next phase:

- Missing values

- Outliers

- Errors

Data

The first step of EDA is to generate or load the data in R. For this tutorial, I generate random data in R to create a dataset with exactly the variables and values I want. Alternatively, you can generate data with any other tool or use a real dataset of your own. I generated this dataset with the following code:

# Set the seed for reproducibility
set.seed(123)
# Generate the dataset
student_data <- data.frame(
  id = 1:1000, # Unique identifier
  country = sample(c("China", "India", "USA", "UK", "Canada", "Brazil"), 1000, replace = TRUE, prob = c(0.2, 0.2, 0.15, 0.15, 0.15, 0.15)), # Country of origin
  gender = sample(c("Male", "Female"), 1000, replace = TRUE, prob = c(0.5, 0.5)), # Gender
  age = sample(18:25, 1000, replace = TRUE), # Age
  major = sample(c("Math", "CS", "Econ", "Eng", "Bio", "Art"), 1000, replace = TRUE, prob = c(0.2, 0.2, 0.15, 0.15, 0.15, 0.15)), # Major field of study
  gpa = round(runif(1000, min = 2, max = 4), 1), # Grade point average
  sat = sample(seq(1000, 1600, by = 50), 1000, replace = TRUE), # SAT score
  toefl = sample(seq(80, 120, by = 5), 1000, replace = TRUE), # TOEFL score
  ielts = round(runif(1000, min = 5, max = 9), 1), # IELTS score
  gre = sample(seq(260, 340, by = 10), 1000, replace = TRUE), # GRE score
  satisfaction = sample(1:5, 1000, replace = TRUE) # Satisfaction level
)
# Make each TOEFL, IELTS, and GRE score missing (NA) with a 20% chance
student_data$toefl[runif(1000) < 0.2] <- NA
student_data$ielts[runif(1000) < 0.2] <- NA
student_data$gre[runif(1000) < 0.2] <- NA

This code creates a dataset called student_data with 1000 rows and 11 columns. Each row represents a student, and each column represents a variable. The variables are:

- id: A unique identifier for each student (1 to 1000).

- country: The student's country of origin, with six possible values: China, India, USA, UK, Canada, and Brazil. China and India are each drawn with probability 0.2, and the other four countries with probability 0.15 each.

- gender: The student's gender, with two possible values: Male and Female. Each value is drawn with probability 0.5, so the dataset contains roughly (not exactly) equal numbers of male and female students.

- age: The student's age, an integer from 18 to 25 drawn from a uniform distribution.

- major: The student's major field of study, with six possible values: Math, CS, Econ, Eng, Bio, and Art. Math and CS are each drawn with probability 0.2, and the other four majors with probability 0.15 each.

- gpa: The student's grade point average, drawn from a uniform distribution between 2 and 4 and rounded to one decimal place.

- sat: The student's SAT score, drawn uniformly from the values 1000 to 1600 in steps of 50.

- toefl: The student's TOEFL score, drawn uniformly from the values 80 to 120 in steps of 5. Each value has a 20% chance of being missing (NA, meaning not available), because some students may not have taken the TOEFL test or may not have reported their score.

- ielts: The student's IELTS score, drawn from a uniform distribution between 5 and 9 and rounded to one decimal place. Each value has a 20% chance of being missing (NA), because some students may not have taken the IELTS test or may not have reported their score.

- gre: The student's GRE score, drawn uniformly from the values 260 to 340 in steps of 10. Each value has a 20% chance of being missing (NA), because some students may not have taken the GRE test or may not have reported their score.

- satisfaction: The student's level of satisfaction with their university, on a scale from 1 (very dissatisfied) to 5 (very satisfied), drawn from a uniform distribution.
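Because the values are random, it can be worth checking that the generated data match the design described above. The helper below, check_generated_data() (a name used only for this illustration), is a minimal base R sketch that reports the observed range and the percentage of missing values for every numeric column.

```r
# Report the observed range and the share of missing values
# for every numeric column of a data frame (base R only).
check_generated_data <- function(df) {
  num_cols <- names(df)[vapply(df, is.numeric, logical(1))]
  data.frame(
    variable    = num_cols,
    min         = vapply(df[num_cols], function(x) min(x, na.rm = TRUE), numeric(1)),
    max         = vapply(df[num_cols], function(x) max(x, na.rm = TRUE), numeric(1)),
    pct_missing = vapply(df[num_cols], function(x) 100 * mean(is.na(x)), numeric(1)),
    row.names   = NULL
  )
}

# Example on a small toy data frame: column b has one missing value out of ten
toy <- data.frame(a = 1:10, b = c(NA, 2:10), label = letters[1:10])
check_generated_data(toy)
```

Called as check_generated_data(student_data), it should show values inside the ranges listed above and, if toefl, ielts, and gre were generated with missing values, roughly 20% missing in each of those three columns.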

Overview of the data

The following code shows the variable names, the number of rows and columns, the structure, and the first five rows of the data:

# Names of the variables
names(student_data)
# Dimensions of the dataset (rows, columns)
dim(student_data)
# Structure of the dataset
str(student_data)
# First five rows of the data
head(student_data, 5)


Summarizing the data using Descriptive Statistics

The next step of EDA is to summarize the data using descriptive statistics. Descriptive statistics are numerical measures that describe the characteristics of the data, such as the mean, median, mode, standard deviation, range, frequency, and percentage. They help us understand the data's central tendency, variability, and distribution. Before computing descriptive statistics, we should convert character variables to factor variables, so that summary() reports the count of each category instead of only the length and type of the column. This can be done with base R functions such as as.factor() or with the mutate() function from the dplyr package, which is part of the tidyverse.

I will use the summary() function, which returns a summary of each variable in the dataset: for numerical variables, the minimum, first quartile, median, mean, third quartile, maximum, and number of missing values; for factor variables, the count of each level. I will use the following code:

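library(dplyr)
# Convert all character columns to factors
student_data <- student_data %>%
  mutate(across(where(is.character), as.factor))
# Descriptive statistics for each variable
summary(student_data)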


From this output, I can see the descriptive statistics of each variable in the dataset. For example, the mean age of the students is 21.49, the mean GPA is 3.002, and the mean satisfaction is 3.01. For the factor variables (country, gender, and major), summary() shows the number of students in each level, listed in alphabetical order (Brazil, Canada, China, ...; Female, Male; Art, Bio, ...), so it is the counts, not the order, that show which levels are most common. Columns with missing values also show an NA's count; I will deal with the missing values later in this article.
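summary() does not report the standard deviation or the percentage of observations in each category, both of which often appear in a descriptive-statistics table for a journal. The sketch below fills that gap with dplyr; the helper names freq_table() and numeric_summary() are hypothetical, and the code assumes dplyr 1.0 or later (for across() and where()).

```r
library(dplyr)

# Frequency and percentage of each level of one categorical column
freq_table <- function(df, var) {
  df %>%
    count({{ var }}) %>%
    mutate(percent = round(100 * n / sum(n), 1))
}

# Mean, standard deviation, and number of missing values of every numeric column
numeric_summary <- function(df) {
  df %>%
    summarise(across(where(is.numeric),
                     list(mean      = ~ mean(.x, na.rm = TRUE),
                          sd        = ~ sd(.x, na.rm = TRUE),
                          n_missing = ~ sum(is.na(.x)))))
}

# Example on a small toy data frame
toy <- data.frame(group = c("a", "a", "b", "b", "b"),
                  score = c(1, 2, 3, NA, 5))
freq_table(toy, group)
numeric_summary(toy)
```

For the student data, freq_table(student_data, country) would give the count and percentage of students from each country, and numeric_summary(student_data) adds the standard deviations that summary() leaves out.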

Visualizing the Data using Graphs

The next step of EDA is to visualize the data using charts and graphs. Charts and graphs are graphical representations of the data that can help us see the data's patterns, trends, and outliers. Charts and graphs can also help us compare the variables and their distributions and explore their relationships.

I will use the ggplot() function from the ggplot2 package, part of the tidyverse, to visualize the data. The ggplot() function allows us to create a plot using the grammar of graphics, a system for describing and building graphs in layers. Each layer can specify a different aspect of the plot, such as the data, the aesthetic mapping, the geometric object, the statistical transformation, the scale, the coordinate system, the facets, the labels, and the theme.

In this article, I will use the following types of charts and graphs to visualize the data:

Bar chart

A bar chart is a graph that uses rectangular bars to show the frequency or proportion of a categorical variable. A bar chart can help us see the distribution and comparison of a categorical variable across different levels or groups.

library(ggplot2)
# Bar chart of country
ggplot(student_data, aes(x = country)) + # Define the data and the x-axis variable
  geom_bar() + # Add a bar chart layer
  labs(title = "Bar chart of country", # Add a title
       x = "Country", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis

Bar Chart of Gender

# Bar chart of gender
ggplot(student_data, aes(x = gender)) + # Define the data and the x-axis variable
  geom_bar() + # Add a bar chart layer
  labs(title = "Bar chart of gender", # Add a title
       x = "Gender", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis

# Bar chart of major
ggplot(student_data, aes(x = major, fill = major)) + # Define the data, the x-axis variable, and the fill colour
  geom_bar() + # Add a bar chart layer
  labs(title = "Bar chart of major", # Add a title
       x = "Major", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis

Histogram

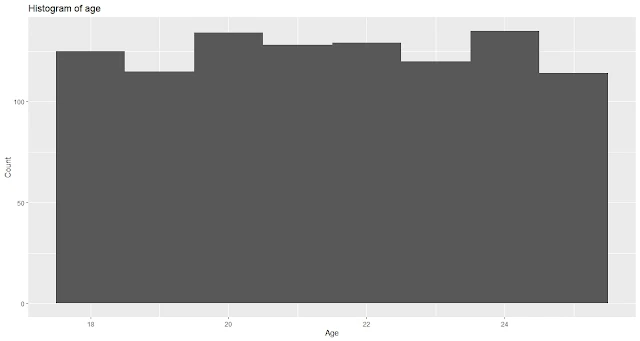

A histogram is a graph that uses rectangular bars to show the frequency or density of a numerical variable. A histogram can help us see the shape and spread of a numerical variable and identify any outliers or gaps in the data.

# Histogram of age
ggplot(student_data, aes(x = age)) + # Define the data and the x-axis variable
  geom_histogram(bins = 8) + # Add a histogram layer with 8 bins
  labs(title = "Histogram of age", # Add a title
       x = "Age", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis

Histogram of gpa

# Histogram of gpa
ggplot(student_data, aes(x = gpa)) + # Define the data and the x-axis variable
  geom_histogram(bins = 10) + # Add a histogram layer with 10 bins
  labs(title = "Histogram of gpa", # Add a title
       x = "GPA", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis

Histogram of sat

# Histogram of sat
ggplot(student_data, aes(x = sat)) + # Define the data and the x-axis variable
  geom_histogram(bins = 10) + # Add a histogram layer with 10 bins
  labs(title = "Histogram of sat", # Add a title
       x = "SAT", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis

Histogram of toefl

# Histogram of toefl
ggplot(student_data, aes(x = toefl)) + # Define the data and the x-axis variable
  geom_histogram(bins = 10) + # Add a histogram layer with 10 bins
  labs(title = "Histogram of toefl", # Add a title
       x = "TOEFL", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis

Histogram of ielts

# Histogram of ielts
ggplot(student_data, aes(x = ielts)) + # Define the data and the x-axis variable
  geom_histogram(bins = 10) + # Add a histogram layer with 10 bins
  labs(title = "Histogram of ielts", # Add a title
       x = "IELTS", # Add a label for the x-axis
       y = "Count") # Add a label for the y-axis
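The function table near the top of this article lists facet_wrap(), which the examples above do not use. As a sketch, the helper below (a hypothetical name) draws the GPA histogram in a separate panel for each major, which makes it easier to compare distributions across groups.

```r
library(ggplot2)

# Histogram of GPA with one panel per major, built with facet_wrap()
gpa_by_major_plot <- function(df) {
  ggplot(df, aes(x = gpa)) +
    geom_histogram(bins = 10) +   # same binning as the GPA histogram above
    facet_wrap(~ major) +         # one panel for each level of major
    labs(title = "Histogram of GPA by major",
         x = "GPA",
         y = "Count")
}

# Example on a small toy data frame with two majors
toy <- data.frame(gpa   = c(2.5, 3.0, 3.5, 3.2, 2.8, 3.9),
                  major = c("Math", "Math", "Math", "Art", "Art", "Art"))
p <- gpa_by_major_plot(toy)
```

For the full dataset, gpa_by_major_plot(student_data) would draw six panels, one per major, on shared axes by default.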

Boxplot

A boxplot is a graph that uses a box and whiskers to show the summary statistics of a numerical variable. A boxplot can help us see a numerical variable's median, quartiles, range, and outliers, and compare them across different levels or groups of a categorical variable.

# Boxplot of age by country
ggplot(student_data, aes(x = country, y = age, fill = country)) + # Define the data and the x-axis and y-axis variables
  geom_boxplot() + # Add a boxplot layer
  labs(title = "Boxplot of age by country", # Add a title
       x = "Country", # Add a label for the x-axis
       y = "Age") # Add a label for the y-axis

Boxplot of Satisfaction by Country

# Boxplot of satisfaction by country
ggplot(student_data, aes(x = country, y = satisfaction)) + # Define the data and the x-axis and y-axis variables
  geom_boxplot() + # Add a boxplot layer
  labs(title = "Boxplot of satisfaction by country", # Add a title
       x = "Country", # Add a label for the x-axis
       y = "Satisfaction") # Add a label for the y-axis

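Scatterplot

A scatterplot uses points to show the relationship between two numerical variables. Adding a fitted line can help us see whether the relationship is roughly linear.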

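# Scatterplot of gpa vs sat, coloured by country
ggplot(student_data, aes(x = gpa, y = sat, color = country)) + # Define the data, the axes, and the colour variable
  geom_point() + # Add a scatterplot layer
  geom_smooth(method = "lm", formula = y ~ x) + # Add a linear-model line; because colour is mapped to country, one line is fitted per country
  labs(title = "Scatterplot of gpa vs sat",
       x = "GPA",
       y = "SAT")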

Identifying missing values and outliers in the data

- Missing values are values that are not recorded or available for a variable.

- Outliers are values that are unusually high or low compared to the rest of the data.

How do I identify missing values and outliers using R

To identify missing values and outliers in the data, I will use the following methods:

For missing values, I will use the is.na() function, which returns TRUE if a value is missing and FALSE otherwise. The na.omit() function, which removes rows with missing values from a dataset, is another useful tool.

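For outliers, I will use the same rule that boxplots use: a value is flagged as an outlier if it lies more than 1.5 times the interquartile range (IQR) below the first quartile or above the third quartile. I apply this rule to each numerical variable with a small helper function, identify_outliers().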
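To see how the missing-value tools mentioned above differ in practice, the sketch below counts missing values per column and compares how many rows survive na.omit(), which drops a row if any column is missing, with how many rows are complete in only the columns you care about (using base R's complete.cases()). The helper name missing_report() is hypothetical.

```r
# Summarise missing values in a data frame:
# - number of NAs per column
# - rows kept by na.omit() (complete in every column)
# - rows complete in a chosen subset of columns
missing_report <- function(df, cols = names(df)) {
  list(
    missing_per_column = colSums(is.na(df)),
    rows_total         = nrow(df),
    rows_complete_all  = nrow(na.omit(df)),
    rows_complete_cols = sum(complete.cases(df[cols]))
  )
}

# Example: columns a and b each have one NA, in different rows
toy <- data.frame(a = c(1, NA, 3, 4), b = c(NA, 2, 3, 4), c = 1:4)
missing_report(toy, cols = "a")
```

Dropping every incomplete row with na.omit() can discard far more data than necessary when an analysis needs only some of the columns; for the student data, missing_report(student_data, cols = "toefl") would show the difference.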

I will use the following code to identify missing values and outliers in the data:

# Identify missing values and outliers in the data
# Check the number of missing values for each variable
sapply(student_data, function(x) sum(is.na(x)))

# Function to identify outliers using the IQR method (beyond 1.5 * IQR from the quartiles)
identify_outliers <- function(data, variable) {
  x <- data[[variable]]
  q1 <- quantile(x, 0.25, na.rm = TRUE) # First quartile, ignoring missing values
  q3 <- quantile(x, 0.75, na.rm = TRUE) # Third quartile, ignoring missing values
  iqr <- q3 - q1
  lower_bound <- q1 - 1.5 * iqr
  upper_bound <- q3 + 1.5 * iqr
  outliers <- x[!is.na(x) & (x < lower_bound | x > upper_bound)]
  return(outliers)
}
# Check for outliers in each numerical variable (the result is a list named by variable)
numeric_vars <- c("age", "gpa", "sat", "toefl", "ielts", "gre", "satisfaction")
lapply(setNames(numeric_vars, numeric_vars), function(variable) {
  identify_outliers(student_data, variable)
})

From this output, I can see that there are no outliers in any of the numerical variables: every value lies within the 1.5 × IQR fences, which is the range covered by the boxplot whiskers. This is expected for this dataset, because each numerical variable was drawn uniformly from a fixed range, and for a uniform distribution the 1.5 × IQR fences lie beyond the minimum and maximum values. I will not need to deal with any outliers in this article.
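As a cross-check, base R's boxplot.stats() returns the points that a boxplot would draw beyond its whiskers, using the same 1.5 × IQR rule. One caveat: it computes the quartiles with fivenum() (Tukey's hinges), which can differ slightly from quantile()'s default, so borderline values may be classified differently. The wrapper name boxplot_outliers() is hypothetical.

```r
# Values that a boxplot would flag as outliers (beyond 1.5 * IQR from the hinges).
# Missing values are removed first.
boxplot_outliers <- function(x) {
  boxplot.stats(x[!is.na(x)], coef = 1.5)$out
}

# Example: 50 lies far above the rest of the values
x <- c(1:10, 50, NA)
boxplot_outliers(x)
```

Applied to the student data, lapply(student_data[c("age", "gpa", "sat", "toefl", "ielts", "gre", "satisfaction")], boxplot_outliers) should agree with identify_outliers() except, possibly, for values that sit right at a fence.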

Transforming and filtering the data

The next step of EDA is to transform and filter the data. Transforming and filtering the data are processes of modifying, creating, or selecting subsets of the data to make it more suitable for analysis. Transforming and filtering the data can help us create new variables, reduce noise, remove outliers, handle missing values, and focus on specific aspects of the data.

To transform and filter the data, I will use the following functions from the dplyr package, which is part of the tidyverse:

- mutate(): This function allows us to create or modify columns of a dataset by applying a formula or a function to existing columns.

- filter(): This function allows us to filter dataset rows based on a condition or a logical expression.

- select(): This function allows us to select columns of a dataset by name or by position.

- group_by(): This function allows us to group a dataset by one or more variables so that subsequent operations, such as summarize(), are applied to each group separately.

- summarize(): This function allows us to summarize a dataset by applying a function to each group or to the whole dataset.

- arrange(): This function allows us to arrange dataset rows by one or more variables in ascending or descending order.
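The composite test score in the code below averages SAT, TOEFL, IELTS (multiplied by 30), and GRE on their raw scales, so SAT, whose values span 1000 to 1600, dominates the average. If you want each test to contribute equally, one alternative (my suggestion, not the approach used in this article) is to convert each score to a z-score before averaging. A minimal base R sketch, with the hypothetical helper name zscore_composite():

```r
# Average several test scores after converting each one to a z-score
# (mean 0, standard deviation 1), so that no test dominates the
# composite simply because it is measured on a larger scale.
zscore_composite <- function(df, cols) {
  z <- scale(as.matrix(df[cols]))  # scale() ignores NAs when computing means and SDs
  rowMeans(z)                      # NA for any row with a missing score
}

# Example: two scores on very different scales
toy <- data.frame(s1 = c(1, 2, 3), s2 = c(100, 200, 300))
zscore_composite(toy, c("s1", "s2"))
```

For the student data, this could be used as student_data$test_z <- zscore_composite(student_data, c("sat", "toefl", "ielts", "gre")). Note that z-scores depend on the sample, so the composite is only comparable within one dataset.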

# Transform and filter the data
library(dplyr)
# Keep student_data unchanged and store the per-student results in student_data1,
# so that later steps (such as the t-test) can still use the per-student data
student_data1 <- student_data %>%
  # Create a composite test score: the mean of the SAT, TOEFL, IELTS (multiplied by 30), and GRE scores
  mutate(test_score = (sat + toefl + ielts * 30 + gre) / 4) %>%
  # Keep only the rows where test_score is not missing
  filter(!is.na(test_score)) %>%
  # Select the columns id, country, gender, major, gpa, test_score, and satisfaction
  select(id, country, gender, major, gpa, test_score, satisfaction)
# Group by country and major, then calculate the mean and standard deviation
# of gpa, test_score, and satisfaction for each group
student_summary <- student_data1 %>%
  group_by(country, major) %>%
  summarize(mean_gpa = mean(gpa),
            sd_gpa = sd(gpa),
            mean_test_score = mean(test_score),
            sd_test_score = sd(test_score),
            mean_satisfaction = mean(satisfaction),
            sd_satisfaction = sd(satisfaction),
            .groups = "drop") %>%
  # Arrange the summary by country and major in ascending order
  arrange(country, major)
student_summary

Hypothesis Testing and Correlation Analysis

The next step of EDA is to perform hypothesis testing and correlation analysis.

These statistical methods help us test the significance and strength of the relationships between the variables in the data: a hypothesis test tells us whether the data provide enough evidence to reject a null hypothesis, and a correlation analysis measures the degree of association between two variables.

To perform hypothesis testing and correlation analysis, I will use the following functions:

- t.test(): It allows us to perform a t-test, a parametric test that compares the means of two groups or variables. A t-test tests the null hypothesis that the means are equal against the alternative hypothesis that the means are different. For two independent groups, R's t.test() uses Welch's version by default, which does not assume equal variances.

- cor.test(): It allows us to perform a correlation test, which estimates the correlation coefficient between two variables and tests the null hypothesis that the correlation is zero against the alternative hypothesis that it is not zero. By default, cor.test() uses Pearson's product-moment correlation, which is a parametric test; setting method = "spearman" or method = "kendall" gives rank-based, non-parametric alternatives.

# Perform hypothesis testing and correlation analysis
# Perform a t-test to compare the mean gpa of the students from China and India
t.test(gpa ~ country, data = filter(student_data, country %in% c("China", "India")))

Based on the t-test output, the p-value is above the conventional 0.05 threshold, so there is no significant difference in the mean GPA of the students from China and India.

# Perform a correlation test (Pearson by default) between the gpa and test_score variables
cor.test(student_data1$gpa, student_data1$test_score)
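t.test() and cor.test() both return "htest" objects, so their p-values, estimates, and confidence intervals can be extracted for a results table. The sketch below (hypothetical helper name summarise_tests(); it assumes a data frame with a numeric gpa, a country column with exactly two groups, and a numeric test_score, such as student_data1 filtered to China and India) runs the default Welch t-test, the default Pearson correlation test, and a rank-based Spearman test for comparison.

```r
# Collect key results from a Welch t-test and two correlation tests.
# Assumes columns: gpa (numeric), country (exactly two groups), test_score (numeric).
summarise_tests <- function(df) {
  welch    <- t.test(gpa ~ country, data = df)            # Welch's t-test by default
  pearson  <- cor.test(df$gpa, df$test_score)             # Pearson correlation by default
  spearman <- cor.test(df$gpa, df$test_score,
                       method = "spearman", exact = FALSE) # rank-based, non-parametric
  list(
    welch_method = welch$method,
    welch_p      = welch$p.value,
    welch_ci     = welch$conf.int,
    pearson_r    = unname(pearson$estimate),
    pearson_p    = pearson$p.value,
    spearman_rho = unname(spearman$estimate),
    spearman_p   = spearman$p.value
  )
}

# Example on simulated toy data with a clear group difference and correlation
set.seed(42)
toy <- data.frame(country = rep(c("China", "India"), each = 20),
                  gpa     = c(rnorm(20, mean = 3.0, sd = 0.3),
                              rnorm(20, mean = 3.5, sd = 0.3)))
toy$test_score <- 100 * toy$gpa + rnorm(40, mean = 0, sd = 1)
res <- summarise_tests(toy)
```

With the article's data, this would be called as summarise_tests(filter(student_data1, country %in% c("China", "India"))). Reporting Pearson and Spearman side by side shows whether a correlation depends on the normality assumption.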

Conclusion

In this article, I walked through the main steps of EDA in R:

- Loading and inspecting the data

- Summarizing the data using descriptive statistics

- Visualizing the data using charts and graphs

- Identifying missing values and outliers in the data

- Transforming and filtering the data

- Performing hypothesis testing and correlation analysis

Limitations and Future Directions

- The dataset is synthetic and may not reflect the real situation of the students who applied for the master’s program at the university. This limits the validity and generalizability of the analysis and the findings. A future direction is to collect more real and complete data from these students and update the analysis accordingly.

- The TOEFL, IELTS, and GRE scores contain missing values, and rows with a missing test score were simply dropped when the composite test score was computed. A future direction is to handle the missing values with methods such as multiple imputation or expectation maximization.

- The dataset only includes a limited number of variables, which capture only some of the factors that influence the academic performance and satisfaction of the students. This limits the comprehensiveness and depth of the analysis and the findings. A future direction is to explore more variables that may be relevant to the admission process and student satisfaction, such as extracurricular activities, personal statements, recommendation letters, and feedback surveys.

Frequently Asked Questions (FAQs)

What is EDA?

EDA stands for exploratory data analysis, which explores and summarizes the data using descriptive statistics, charts, graphs, and other methods. EDA can help us understand the characteristics and relationships of the variables in the data and discover the patterns and insights from the data.

What is R?

R is a programming language and software environment for statistical computing and graphics. R can help us perform data analysis, visualization, manipulation, and modeling using various packages and functions.

What is R Markdown?

R Markdown is a document format that allows us to combine text, code, and output in a single file. R Markdown can help us create a reproducible and dynamic report documenting our data analysis process and results.

What is tidyverse?

tidyverse is a collection of R packages that share a common philosophy and design for data analysis. tidyverse can help us perform data transformation, filtering, visualization, modeling, and reporting using various functions and tools.

What is a t-test?

A t-test is a parametric test comparing the means of two groups or variables. A t-test can help us test the null hypothesis that the means are equal, and the alternative hypothesis that the means are different.

What is a correlation test?

A correlation test estimates the correlation coefficient between two variables and tests the null hypothesis that the correlation is zero against the alternative hypothesis that it is not zero. Pearson's correlation test (the default in R's cor.test()) is parametric; Spearman's and Kendall's tests are rank-based, non-parametric alternatives.

What is a correlation coefficient?

A correlation coefficient is a numerical measure that indicates the strength and direction of the relationship between two variables. It ranges from -1 to 1, where -1 means a perfect negative relationship, 0 means no linear relationship (for Pearson's coefficient), and 1 means a perfect positive relationship.
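Pearson's coefficient is the covariance of the two variables divided by the product of their standard deviations. The short base R sketch below (the function name pearson_r() is hypothetical) computes it directly from that definition and checks it against cor().

```r
# Pearson correlation coefficient computed from its definition:
# the sum of cross-products of deviations divided by the square root
# of the product of the sums of squared deviations.
pearson_r <- function(x, y) {
  dx <- x - mean(x)
  dy <- y - mean(y)
  sum(dx * dy) / sqrt(sum(dx^2) * sum(dy^2))
}

x <- c(1, 2, 3, 4, 5)
pearson_r(x, 2 * x)             # perfect positive relationship: 1
pearson_r(x, rev(x))            # perfect negative relationship: -1
pearson_r(x, c(2, 1, 4, 3, 5))
cor(x, c(2, 1, 4, 3, 5))        # same value from base R
```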

What is a p-value?

A p-value is the probability, assuming the null hypothesis is true, of obtaining a result at least as extreme as the one observed. It ranges from 0 to 1: the smaller the p-value, the stronger the evidence against the null hypothesis, while a large p-value means the data are consistent with the null hypothesis (it does not prove that the null hypothesis is true). A common threshold for significance is 0.05: if the p-value is less than 0.05, we reject the null hypothesis in favor of the alternative hypothesis.
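To make the definition concrete, the sketch below computes a two-sided p-value for a one-sample t-test by hand, as the probability under the null hypothesis of a t statistic at least as extreme as the observed one, and compares it with t.test(). The sample values and the function name manual_t_p() are made up for illustration.

```r
# Two-sided p-value of a one-sample t-test, computed from the t distribution.
manual_t_p <- function(x, mu = 0) {
  n <- length(x)
  t_stat <- (mean(x) - mu) / (sd(x) / sqrt(n))   # observed t statistic
  2 * pt(-abs(t_stat), df = n - 1)               # P(|T| >= |t_stat|) under H0
}

gpa_sample <- c(2.9, 3.1, 3.4, 2.8, 3.3, 3.0)
manual_t_p(gpa_sample, mu = 3)
t.test(gpa_sample, mu = 3)$p.value   # should match the value above
```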

What is a confidence interval?

A confidence interval is a range of values that estimates the true value of a parameter with a certain level of confidence. It helps us quantify the uncertainty of the estimate. A common confidence level is 95%, which means that if we repeated the experiment many times and computed an interval each time, about 95% of those intervals would contain the true value of the parameter.
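The repeated-sampling interpretation of a 95% confidence interval can be checked with a small simulation: draw many samples from a population with a known mean, build a t-based interval from each, and count how often the interval contains that mean. The population values below (mean 3, standard deviation 0.5, samples of 30) are arbitrary choices for illustration.

```r
# Estimate the coverage of 95% t-based confidence intervals by simulation.
ci_coverage <- function(n_sims = 2000, n = 30, mu = 3, sigma = 0.5) {
  covered <- replicate(n_sims, {
    ci <- t.test(rnorm(n, mean = mu, sd = sigma), conf.level = 0.95)$conf.int
    ci[1] <= mu && mu <= ci[2]     # TRUE if this interval contains the true mean
  })
  mean(covered)                    # proportion of intervals that contain mu
}

set.seed(1)
ci_coverage()   # expected to be close to 0.95
```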

What is a boxplot?

A boxplot is a graph that uses a box and whiskers to show the summary statistics of a numerical variable. A boxplot can help us see a numerical variable's median, quartiles, range, and outliers, and compare them across different levels or groups of a categorical variable.

Need a customized solution for your data analysis project, or would you like to learn through Zoom? Hire me as your data analyst. I have five years of experience and a PhD, and I can help you with data analysis projects and problems using R and other tools. You can visit this link and fill out the order form to hire me. You can also contact me at info@rstudiodatalab.com with any questions or inquiries. I will be happy to work with you and provide high-quality data analysis services.