Power of Logistic Regression in RStudio for Predictive Analysis

Key Points

- Unlock hidden insights with Logistic Regression in RStudio: A beginner's guide to predictive analytics.

- Master Logistic Regression in RStudio with step-by-step instructions and practical examples.

- Discover real-world applications of Logistic Regression: from medical research to fraud detection.

- Prepare your data for Logistic Regression: clean, select features, transform, and split.

- Evaluate and optimize your Logistic Regression model: accuracy, precision, and handling imbalanced data.

Introduction

Welcome to logistic regression in RStudio! In today's data-driven world, making predictions from available data is crucial for businesses, researchers, and decision-makers. Logistic regression, a widely used statistical technique, predicts the probability of an outcome from one or more factors, or predictors.

In this guide, we will learn the basics of logistic regression, including its applications and how to use it in RStudio.

By the end, you'll have the knowledge and skills to leverage logistic regression for predictive analytics.

Understanding Logistic Regression: A Beginner's Introduction

Before diving in, it's important to grasp the fundamental concepts behind this statistical technique. Logistic regression models the relationship between one or more independent variables (predictors) and a binary outcome, such as survived/did not survive or default/no default.

Unlike linear regression, which predicts a continuous outcome, logistic regression estimates the probability that an event occurs. A probability threshold can then turn that estimate into a yes/no prediction.
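Under the hood, logistic regression models the log-odds of the event, log(p / (1 - p)), as a linear function of the predictors, and converts the result back into a probability with the logistic (sigmoid) function p = 1 / (1 + exp(-z)). The minimal sketch below evaluates that function for a hypothetical linear predictor z = 0.5 - 1.2x; the coefficients 0.5 and -1.2 and the helper name `sigmoid` are made up for illustration. Base R already provides the same function as `plogis()`, and its inverse (the logit) as `qlogis()`.

```r
# Hypothetical helper: the logistic (sigmoid) function, mapping any real z into (0, 1)
sigmoid <- function(z) 1 / (1 + exp(-z))

# Hypothetical model with intercept 0.5 and slope -1.2 for a single predictor x
x <- c(-2, 0, 2)
z <- 0.5 - 1.2 * x   # linear predictor on the log-odds scale
p <- sigmoid(z)      # corresponding probabilities

print(round(p, 3))

# plogis() is base R's logistic function; qlogis() (the logit) undoes it
print(all.equal(p, plogis(z)))
print(all.equal(qlogis(p), z))
```

Because the slope is negative, the probability falls as x increases, yet it never leaves the interval (0, 1).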

RStudio is an integrated development environment (IDE) for the R language, and all the code in this guide is plain R. With suitable predictors and careful evaluation, logistic regression can produce useful predictions and insights from your data.

In R, logistic regression is fitted with the glm() function and the argument family = binomial; the lm() function fits linear regression instead. Let's use the Titanic dataset as an example to illustrate the implementation of logistic regression in RStudio.
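The difference between glm() and lm() is easy to see on a small binary outcome. This sketch uses R's built-in mtcars data (am is 1 for a manual transmission and 0 for an automatic) instead of the Titanic file, so it runs without any download. The logistic fit keeps every fitted probability between 0 and 1, whereas a linear fit to the same 0/1 outcome can produce values below 0.

```r
# mtcars ships with R: am = 1 (manual transmission) or 0 (automatic)
logit_fit <- glm(am ~ wt, data = mtcars, family = binomial)  # logistic regression
linear_fit <- lm(am ~ wt, data = mtcars)                      # ordinary linear regression, for contrast

# Logistic fitted values are probabilities strictly inside (0, 1)
print(range(fitted(logit_fit)))

# Linear fitted values are unbounded; the heaviest cars get a negative "probability"
print(range(fitted(linear_fit)))
```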

Applications of Logistic Regression

Logistic regression finds extensive applications across various industries and fields. Here are a few examples where logistic regression proves invaluable:

- Medical Research: Logistic regression is widely used to predict the likelihood of a disease based on risk factors. It helps identify significant predictors and assists in disease diagnosis and treatment planning.

- Marketing: Logistic regression is an important tool in marketing analytics. It estimates how likely customers are to stop using a product (churn) or to respond to a marketing campaign, helping businesses target their marketing and improve customer retention.

- Credit Risk Assessment: Logistic regression is employed in credit risk assessment to evaluate the probability of default on loans. It aids financial institutions in making informed lending decisions and managing risk effectively.

- Fraud Detection: Logistic regression is a useful tool in fraud detection. It estimates how likely each transaction is to be fraudulent from its characteristics, helping businesses flag suspicious activity, minimize financial losses, and safeguard against fraud.

- Social Sciences: Logistic regression is used in social science research to predict binary outcomes such as whether a person votes, is employed, or completes school. It helps researchers gain insights into human behaviour and societal patterns.

Logistic Regression with R

Preparing Your Data

Before implementing logistic regression, it is crucial to prepare your data appropriately. This involves several steps to ensure that your data is in a suitable format and ready for analysis. Let's explore the key steps involved in data preparation for logistic regression:

Data Cleaning: Remove duplicate records, handle missing values, and address outliers that could impact the model's performance.

Feature Selection: Identify relevant predictors likely to influence the outcome and remove irrelevant or redundant variables.

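Data Transformation: Convert categorical variables to factors. R's modelling functions such as glm() turn each factor into dummy (indicator) variables automatically, using one level as the reference category, so manual one-hot encoding is not needed. Optionally, standardize numerical features so they are on a similar scale, which makes their coefficients easier to compare.

Data Splitting: Set aside part of the data as a test set, so the model can be evaluated on observations it was not trained on.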
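A small sketch of both transformations, on a made-up four-row data frame (the values are illustrative only, not read from the Titanic file): model.matrix() shows the design matrix that glm() builds from a formula, including the dummy column for a factor, and scale() standardizes a numeric column.

```r
# Made-up example data for illustration
toy <- data.frame(
  Sex  = factor(c("male", "female", "female", "male")),
  Fare = c(7.25, 71.28, 8.05, 53.10)
)

# Design matrix used by model-fitting functions: "female" (the first level)
# is the reference category, so only a Sexmale indicator column is created
X <- model.matrix(~ Sex + Fare, data = toy)
print(X)

# scale() centres the column to mean 0 and rescales it to standard deviation 1
toy$Fare_std <- as.numeric(scale(toy$Fare))
print(toy)
```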

#Load the required packages for data preparation

library(dplyr)

library(tibble)

library(tidyr)

titanic <- read.csv("data (2).csv")

#Select relevant variables for analysis

titanic_df <- titanic %>%

select(Survived, Pclass, Sex, Age, Fare)

#Handle missing values

titanic_df <- titanic_df %>%

drop_na()

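#Convert categorical variables to factors (glm() creates the dummy variables itself)

#Pclass stays numeric (1, 2, 3), so the model estimates a single Pclass coefficient

titanic_df <- titanic_df %>%

mutate(Sex = as.factor(Sex)) %>%

mutate(Survived = factor(Survived, levels = c(0, 1), labels = c("Not Survived", "Survived")))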

#Split the data into training and testing sets

set.seed(123)

train_indices <- sample(1:nrow(titanic_df), 0.7 * nrow(titanic_df))

train_data <- titanic_df[train_indices, ]

test_data <- titanic_df[-train_indices, ]

Exploratory Data Analysis (EDA)

Exploratory Data Analysis (EDA) is a critical step in any data analysis project, including logistic regression. EDA allows us to understand the relationships between variables, identify patterns, detect outliers, and gain insights into the underlying structure of the data. By visualizing and summarizing your data, you can better understand the variables and their potential impact on the outcome. Let's explore various EDA techniques to extract meaningful information from your dataset:

Descriptive Statistics

Calculate measures such as mean, median, and standard deviation to summarize the distribution of variables.

Data Visualization

Create histograms, scatter plots, box plots, and other visualizations to visualize the relationships between variables and identify potential trends or patterns.

Correlation Analysis

Compute correlation coefficients to understand the strength and direction of relationships between variables.
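Correlation coefficients apply to numeric variables. The sketch below uses R's built-in mtcars data as a stand-in; with the Titanic data you would pass numeric columns such as titanic_df[, c("Age", "Fare")] instead. cor() returns the Pearson correlation matrix by default, and cor.test() tests whether a single correlation differs from zero.

```r
# Numeric columns from the built-in mtcars data (a stand-in for your own data)
num_vars <- mtcars[, c("mpg", "wt", "hp")]

# Pearson correlation matrix (the default method)
cor_matrix <- cor(num_vars)
print(round(cor_matrix, 2))

# Test one pair: is the mpg-wt correlation different from zero?
ct <- cor.test(mtcars$mpg, mtcars$wt)
print(ct$estimate)
print(ct$p.value)
```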

Let's perform some EDA on the Titanic dataset.

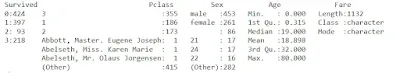

#Load the required packages for EDA

library(ggplot2)

library(ggpubr)

#Descriptive statistics

summary(titanic_df)

#Visualize the distribution of variables

ggplot(titanic_df, aes(x = Survived, fill = Survived)) +

geom_bar() +

ggtitle("Survival Distribution")

ggplot(titanic_df, aes(x = Age, fill = Survived)) +

geom_histogram(binwidth = 5) +

ggtitle("Age Distribution")

After performing EDA, it's time to build our logistic regression model. In R, the glm() function (for Generalized Linear Models, part of the built-in stats package) fits logistic regression models without any extra packages.

Let's walk through the step-by-step process of fitting the model, evaluating its performance, and interpreting the results. The following are the key steps involved in building a logistic regression model:

Model Formulation

Define the dependent variable (outcome) and the independent variables (predictors) based on your research question or problem statement. In our case, let's consider predicting the survival of passengers on the Titanic based on factors such as passenger class, sex, age, and fare.

#Build the logistic regression model

model <- glm(Survived ~ Pclass + Sex + Age + Fare, data = train_data, family = binomial)

#Print the summary of the model

summary(model)

#Print the model coefficients

coefficients <- coef(model)

print(coefficients)

The glm() function fits the logistic regression model. The formula Survived ~ Pclass + Sex + Age + Fare specifies the dependent variable, Survived, and the independent variables Pclass, Sex, Age, and Fare. The argument family = binomial tells glm() to use the binomial family, whose default link function is the logit; that combination is logistic regression.
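To make the role of family = binomial concrete, this sketch (on the built-in mtcars data, not the Titanic model) checks that the binomial family uses the logit link, and that predict() returns log-odds with type = "link" and probabilities with type = "response", the latter being the logistic transform of the former.

```r
# The binomial family's default link function is the logit
fam <- binomial()
print(fam$link)

fit <- glm(am ~ wt, data = mtcars, family = binomial)

eta <- predict(fit, type = "link")      # linear predictor: log-odds
p   <- predict(fit, type = "response")  # predicted probabilities

# Probabilities are the logistic transform of the log-odds
print(all.equal(p, plogis(eta)))
```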

How to Interpret the Results of the Logistic Regression

The logistic regression model was built using the following formula:

Survived ~ Pclass + Sex + Age + Fare

The coefficients of the model indicate the relationship between the predictors and the likelihood of survival. Here are the key findings:

- Pclass (Passenger Class): Each one-step increase in class number (for example, from first to second class) multiplies the odds of survival by approximately 0.32 (exp(-1.1458967)), roughly a 68% reduction, holding the other predictors constant.

- Sex (Gender - Male): Being male multiplies the odds of survival by approximately 0.09 (exp(-2.4483460)) relative to being female, roughly a 91% reduction.

- Age: Each additional year of age multiplies the odds of survival by approximately 0.96 (exp(-0.0373859)), roughly a 4% reduction per year.

- Fare: The coefficient for Fare is not statistically significant (p-value > 0.05), suggesting that it may not significantly impact the odds of survival.
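The odds ratios quoted above can be reproduced from the reported coefficients with a few lines of R, along with the corresponding percentage change in the odds. The vector below simply copies those three estimates; the element names are labels chosen for this sketch.

```r
# Coefficients copied from the model output discussed above (log-odds scale)
b <- c(Pclass = -1.1458967, Sexmale = -2.4483460, Age = -0.0373859)

odds_ratio <- exp(b)                 # multiplicative change in the odds
pct_change <- 100 * (odds_ratio - 1) # percentage change in the odds

print(round(odds_ratio, 2))
print(round(pct_change, 1))
```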

The significance codes indicate the level of statistical significance: "***" (p-value < 0.001), "**" (p-value < 0.01), "*" (p-value < 0.05), "." (p-value < 0.1), and " " (p-value > 0.1).

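The null deviance (from a model with only an intercept) and the residual deviance (from the fitted model) measure how well each model fits; smaller deviance means a better fit. Here the residual deviance is 467.97. On its own that number says little: what matters is how far it drops from the null deviance, since a large drop means the predictors substantially improve on an intercept-only model.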
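The comparison between null and residual deviance can be turned into a formal likelihood-ratio test. This sketch uses the built-in mtcars data so that it runs on its own; with the Titanic model you would apply the same lines to model. The drop in deviance is compared with a chi-squared distribution whose degrees of freedom equal the number of added parameters. McFadden's pseudo-R-squared, 1 - residual deviance / null deviance, is included as a rough summary of the improvement.

```r
fit <- glm(am ~ wt, data = mtcars, family = binomial)

# Likelihood-ratio test: intercept-only model vs. the fitted model
lr_stat <- fit$null.deviance - fit$deviance
lr_df   <- fit$df.null - fit$df.residual    # number of added parameters
p_value <- pchisq(lr_stat, df = lr_df, lower.tail = FALSE)

# McFadden's pseudo-R-squared: share of the null deviance explained
mcfadden_r2 <- 1 - fit$deviance / fit$null.deviance

print(c(LR = lr_stat, df = lr_df, p = p_value, McFadden = mcfadden_r2))
```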

The Akaike Information Criterion (AIC) is used to compare models. The lower the AIC value, the better the model. The AIC value for this model is 477.97.
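For a 0/1 outcome, the AIC that glm() reports equals the residual deviance plus twice the number of estimated parameters. That is why the two figures above differ by exactly 10: 467.97 + 2 × 5 = 477.97, consistent with five estimated parameters (an intercept plus the four slopes). The sketch below checks the identity on the built-in mtcars data.

```r
fit <- glm(am ~ wt, data = mtcars, family = binomial)

k <- length(coef(fit))              # estimated parameters: intercept + slope
aic_by_hand <- fit$deviance + 2 * k # valid for a 0/1 (Bernoulli) response
print(all.equal(aic_by_hand, AIC(fit)))

# The Titanic figures quoted above follow the same arithmetic
print(467.97 + 2 * 5)
```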

Based on these results, Pclass, Sex, and Age are statistically significant predictors of survival in this model, while Fare does not appear to add significant information once the other predictors are included. The fitting algorithm (Fisher scoring) converged after 4 iterations. These findings describe the factors associated with survival in the Titanic dataset, and the model can be used to make predictions on new data.

Model Significance and Goodness of Fit

Assess the model's goodness-of-fit by examining the significance of coefficients, overall model significance, and deviance.

#Evaluate the model's significance and goodness-of-fit

anova(model, test = "Chisq")

#Interpret the model coefficients

odds_ratio <- exp(coefficients)

print(odds_ratio)

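The anova() call adds the predictors one at a time, in the order they appear in the formula, and reports a likelihood-ratio (chi-squared) test for each. Because the coefficients are on the log-odds scale, exp() converts each one into an odds ratio: the multiplicative change in the odds for a one-unit increase in the predictor, holding the other predictors constant.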
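Odds ratios are easier to judge with a confidence interval. A minimal sketch, again on the built-in mtcars data so that it runs on its own: confint.default() returns Wald intervals (estimate ± 1.96 standard errors on the log-odds scale), and exponentiating the whole table puts both the estimates and the interval limits on the odds-ratio scale. The same call works on model.

```r
fit <- glm(am ~ wt, data = mtcars, family = binomial)

# Columns: odds ratio, then lower and upper 95% Wald limits
or_table <- exp(cbind(OR = coef(fit), confint.default(fit)))
print(round(or_table, 3))
```

An interval for a slope that lies entirely below 1 (or entirely above 1) points to a predictor whose effect on the odds is unlikely to be zero.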

Predictions and Decision Threshold

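Apply the trained model to new data: predict() with type = "response" returns predicted probabilities of survival, and a decision threshold converts them into binary outcomes.

#Predict the outcomes of the testing data

predictions <- predict(model, newdata = test_data, type = "response")

threshold <- 0.5

#For a factor outcome, glm() models the probability of the second level, so label predictions with the outcome's own levels

outcome_levels <- levels(train_data$Survived)

binary_predictions <- ifelse(predictions >= threshold, outcome_levels[2], outcome_levels[1])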
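A threshold of 0.5 is a convention rather than a requirement. Lowering it labels more passengers as survivors (typically raising recall at the cost of precision), and raising it does the opposite. The made-up probabilities below illustrate the effect.

```r
# Made-up predicted probabilities for five passengers
probs <- c(0.10, 0.35, 0.55, 0.80, 0.95)
thresholds <- c(0.3, 0.5, 0.7)

# Count how many passengers are classified as survivors at each threshold
n_positive <- sapply(thresholds, function(t) sum(probs >= t))
names(n_positive) <- thresholds
print(n_positive)
```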

By following these steps, you can build a logistic regression model that captures the relationships between predictors and outcome, and use it to make predictions for new observations.

Model Evaluation

After building a logistic regression model, evaluating its performance on the held-out test data is crucial. This section covers accuracy, precision, recall, and the receiver operating characteristic (ROC) curve.

- Accuracy: The proportion of correct predictions among all predictions. It is a simple overall measure, but it can be misleading when one class is much more common than the other.

#Calculate the accuracy of the model

accuracy <- sum(binary_predictions == test_data$Survived) / length(test_data$Survived)

print(accuracy)

- Precision and Recall: Precision measures the proportion of true positive predictions out of all positive predictions, while recall measures the proportion of true positive predictions out of all actual positive cases.

#Load caret for confusionMatrix()

library(caret)

#Convert binary_predictions to a factor with the same levels as test_data$Survived

binary_predictions <- factor(binary_predictions, levels = levels(test_data$Survived))

#Calculate the confusion matrix; positive names the event class (caret otherwise uses the first level)

confusion_matrix <- confusionMatrix(data = binary_predictions, reference = test_data$Survived, positive = levels(test_data$Survived)[2], mode = "everything")

print(confusion_matrix)

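The confusionMatrix() function from the caret package reports precision and recall (along with sensitivity, specificity, and other statistics) computed from the confusion matrix. Set positive to the class you treat as the event; with two levels and no positive argument, caret uses the first level.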
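The quantities confusionMatrix() reports can also be computed by hand from a 2 × 2 table, which makes the definitions concrete. The six outcomes below are made up, and "Survived" is treated as the positive class.

```r
lv <- c("Not Survived", "Survived")
actual    <- factor(c("Survived", "Survived", "Not Survived",
                      "Not Survived", "Survived", "Not Survived"), levels = lv)
predicted <- factor(c("Survived", "Not Survived", "Not Survived",
                      "Survived", "Survived", "Survived"), levels = lv)

# Rows: predicted class; columns: actual class
cm <- table(Predicted = predicted, Actual = actual)
tp <- cm["Survived", "Survived"]          # true positives
fp <- cm["Survived", "Not Survived"]      # false positives
fn <- cm["Not Survived", "Survived"]      # false negatives
tn <- cm["Not Survived", "Not Survived"]  # true negatives

accuracy  <- (tp + tn) / sum(cm)
precision <- tp / (tp + fp)  # share of predicted survivors who survived
recall    <- tp / (tp + fn)  # share of actual survivors that were found

print(cm)
print(c(accuracy = accuracy, precision = precision, recall = recall))
```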

- Receiver Operating Characteristic (ROC) Curve: The ROC curve plots the true positive rate (sensitivity) against the false positive rate (1 - specificity) at various decision thresholds. It provides a visual representation of the trade-off between sensitivity and specificity.

#Calculate the ROC curve and area under the curve (AUC)

library(pROC)

roc_obj <- roc(test_data$Survived, predictions)

auc_value <- auc(roc_obj)

#Plot the ROC curve and annotate it with the AUC

plot(roc_obj, main = "Receiver Operating Characteristic (ROC) Curve")

legend("bottomright", legend = paste("AUC =", round(as.numeric(auc_value), 2)))

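The roc() function from the pROC package calculates the ROC curve, the auc() function calculates the area under the curve (AUC), and plot() draws the curve. An AUC of 0.5 means the model ranks cases no better than chance, while an AUC of 1 means it separates survivors from non-survivors perfectly.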
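The AUC also has a direct interpretation: it is the probability that a randomly chosen positive case receives a higher predicted score than a randomly chosen negative case, with ties counted as one half. The sketch below computes it that way for made-up labels and scores; `auc_rank` is a hypothetical helper written for this illustration, not a pROC function.

```r
# Hypothetical helper: AUC as the share of (positive, negative) pairs ranked correctly
auc_rank <- function(labels, scores) {
  pos <- scores[labels == 1]
  neg <- scores[labels == 0]
  # Matrix of differences: one row per positive, one column per negative
  diffs <- outer(pos, neg, FUN = "-")
  # Correctly ordered pairs count 1, ties count 0.5
  mean((diffs > 0) + 0.5 * (diffs == 0))
}

labels <- c(1, 1, 0, 0, 1, 0)
scores <- c(0.9, 0.4, 0.35, 0.8, 0.7, 0.1)
print(auc_rank(labels, scores))
```

Here 7 of the 9 positive/negative pairs are ordered correctly, so the AUC is 7/9.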

By evaluating these metrics, you gain insights into the performance of your logistic regression model and can assess its accuracy and effectiveness.

Remember that deploying and using your logistic regression model is an iterative process. It's essential to continuously evaluate and update your model as new data becomes available and business requirements evolve.

Conclusion

In this beginner's guide, we explored how logistic regression predicts outcomes from data. From understanding the basics to building and evaluating models, you now have a solid foundation in logistic regression. Using R in RStudio and the Titanic dataset, you learned how to prepare your data, perform exploratory data analysis, build and interpret a logistic regression model, evaluate it with accuracy, precision, recall, and the ROC curve, and use it to make predictions.

Remember, practice and experimentation are key to mastering any statistical technique. So, apply the knowledge you've gained here to your data analysis projects. Logistic regression opens up a world of possibilities for predictive analytics, enabling you to make informed decisions and uncover valuable insights from your data.

Do you need help with a data analysis project? Let me assist you! With a PhD and ten years of experience, I specialize in solving data analysis challenges using R and other advanced tools. Reach out to me for personalized solutions tailored to your needs.